Esther Chan is First Draft's Australia Bureau Editor. First Draft aims to empower people with knowledge and tools to build resilience against harmful, false, and misleading information.

This is the second in a two-part blog series. Read part one, on election disinformation tactics and strategies, here.

During elections, journalists increasingly need to monitor, detect and debunk mis- and disinformation. First Draft offers essential guides and regular training workshops that advise journalists and researchers on setting up monitoring, verifying user-generated content (UGC) with open-source intelligence (OSINT) tools, and exploring related topics.

Debunking and fact checking after mis- or disinformation has already had an impact are useful ways to help stop the spread. Another approach, advocated by John Cook, Stephan Lewandowsky and Ullrich K. H. Ecker, is to act before problematic information gains traction: prebunking.

Prebunking is “the process of debunking lies, tactics or sources before they strike,” on the principle that prevention is more impactful than cure. Tips on what and how to prebunk include anticipating what information people need, identifying data voids (subjects where credible information is lacking), drawing out the specific tactics used to spread the disinformation, and warning your audience.

Collaboration among media organisations is key for prebunking to succeed in guarding against harmful misinformation. Signal-sharing between journalists and newsrooms is crucial as they pool resources to foresee possible election-related mis- and disinformation and prepare their audiences for it. Prebunking can be made even more effective by a multi-pronged approach involving academics, communicators, social media platforms, policymakers and the wider community, including strategies such as inviting the public to submit tips about misleading or false content related to the elections.

We launched our latest project ahead of the federal election. Our disinformation researchers provide daily alerts and prebunks on election misinformation that this group can feature in their reports, discuss with each other and use to deepen their own investigations. The project also offers bespoke support that helps build resilience against online threats and tactics of manipulation.

Avoid amplification

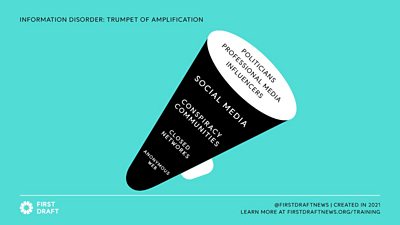

Once inaccurate or false information is identified, the next question is whether to report on it. That depends on where the information sits along the path disinformation often takes online.

More often than not, publishing mis- and disinformation carries risks and consequences, and it can make journalists and researchers complicit in its spread. As First Draft co-founder Claire Wardle pointed out: “Unfortunately at this point, it often moves into the professional media. This might be when a false piece of information or content is embedded in an article or quoted in a story without adequate verification. But it might also be when a newsroom decides to publish a debunk or expose the primary source of a conspiracy. Either way, the agents of disinformation have won. Amplification, in any form, was their goal in the first place.”

Before publishing inaccurate, misleading, false or harmful content, work through these considerations to avoid amplification:

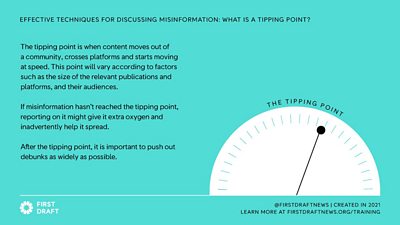

- Consider the impact and virality of the content: engagement, interactions, the number of platforms and languages where it has appeared, whether it has spilled over the “tipping point”.

- Decide whether you need to include the links, visuals, source and the platforms where you spotted the problematic content in your reporting. Doing so sometimes only attracts further attention to the misinformation.

- If you choose to feature visuals that contain mis- and disinformation, apply an overlay, or a visual filter, with prominent warnings and watermarks that travel with the images to prevent further amplifying harmful content.

- Provide labels in the overlays and in your coverage that explain the type of disinformation involved.

- Provide context that helps your audience connect the dots and make sense of the bigger picture, including possible motivations behind the disinformation and potential threats.

- Provide service journalism pieces with clear headlines that lead with facts and not falsehoods.

- Avoid judgmental or accusatory language. A focus on the lesson to be learned from a media literacy point of view is far more powerful in stopping the spread of misinformation.

What to expect

Tactics and strategies to spread election misinformation evolve day by day. The trends we expect to see in 2022 include a focus on domestic narratives, homegrown actions inspired by popular rhetoric overseas, exploitation of communities, disinformation being shared in multiple languages, and the use of offline outreach, including letterbox drops, posters, banners, leaflets and billboards, as well as TV.

These efforts can also be reinforced by social media platforms’ algorithms and the echo chambers these online spaces create. Ahead of any election, three things are therefore crucial: effective prebunking, a multi-pronged approach that involves all stakeholders in the fight against disinformation, and ongoing media literacy training.

- First Draft Part One: Election disinformation tactics and strategies. In the first of a two-part blog on disinformation and elections from First Draft, Esther Chan writes about how journalists can tackle the problem.

- How not to amplify bad ideas. Mike Wendling, Editor of BBC Trending, describes the problem of amplification and how to avoid it.

- EBU view: 100 years of public service media. The EBU's Noel Curran writes about the role members play in protecting democracy.

- Facing the information apocalypse. Radio-Canada's Jeff Yates writes about his role tackling disinformation.