Welcome to our fourth post on the work we’re doing with software development agency Isotoma. They're helping us produce a browser-based vision mixing interface to support low-cost production run by a single operator. This blog post is written by Isotoma's Doug Winter and is the fourth part of their ongoing series about working with the BBC Research & Development team. If you’re new to this project, you should start at the beginning!

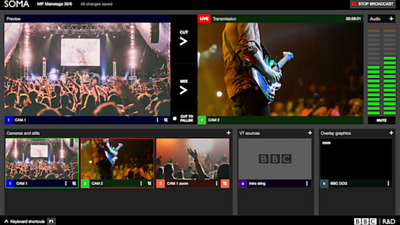

Like all vision mixers, SOMA (Single Operator Mixing Application) has “preview” and “transmission” monitors. Preview shows how different inputs will appear when composed together: in our case, a video input, a “lower third” graphic such as a caption which fades in and out, and finally a “DOG” (digital on-screen graphic) such as a channel or event identifier shown in the top corner throughout a broadcast.

When switching between video feeds, SOMA offers either a fast cut or a slower mix between the two inputs. As edit decisions are made, the resulting output is shown in the transmission monitor.

The problem with software

However, one difference with SOMA is that all the composition and mixing is simulated. SOMA is used to build a set of edit decisions which are replayed later by a broadcast-quality renderer. The transmission monitor cannot simply show the output after the effects have been applied, because the edit decisions have not actually been rendered yet. Instead, the app needs to provide an accurate simulation of what each edit decision will look like.
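To make "simulating an edit decision" concrete, here is a minimal sketch assuming a hypothetical `EditDecision` record (a start time, a target input and a mix duration, where zero means a cut). These names are illustrative, not SOMA's actual data model. Given a playback time, `evaluateProgramme` works out which inputs the transmission monitor should show and with what weight, which is the same question a renderer replaying the list later has to answer. For simplicity it assumes decisions do not overlap.

```typescript
// Hypothetical edit decision: at `time` (seconds), switch to `input`.
// mixDuration 0 means a cut; otherwise a linear mix over that many seconds.
interface EditDecision {
  time: number;
  input: string;
  mixDuration: number;
}

// Weight of each visible input at one moment; the weights sum to 1.
type ProgrammeState = Map<string, number>;

// Assumes a new decision never starts while a previous mix is still running.
function evaluateProgramme(
  initialInput: string,
  decisions: EditDecision[],
  t: number,
): ProgrammeState {
  // Replay, in time order, only the decisions that have started by time t.
  const started = decisions
    .filter((d) => d.time <= t)
    .sort((a, b) => a.time - b.time);

  let current = initialInput;
  let state: ProgrammeState = new Map([[current, 1]]);

  for (const d of started) {
    const elapsed = t - d.time;
    if (d.mixDuration <= 0 || elapsed >= d.mixDuration) {
      // A cut, or a mix that has finished: only the new input is visible.
      state = new Map([[d.input, 1]]);
    } else {
      // Mix in progress: crossfade linearly from the current input to the new one.
      const progress = elapsed / d.mixDuration;
      state = new Map([
        [current, 1 - progress],
        [d.input, progress],
      ]);
    }
    current = d.input;
  }
  return state;
}
```

This only models the main video layer; the lower third and DOG would need their own opacity timelines.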

Building this required breaking down how the output is composed. During a mix, both the old and new sources are visible, so six inputs are required: an old and a new source for each of the three layers (video, lower third and DOG).

VideoContext to the rescue

Enter VideoContext, a video scheduling and compositing library created by BBC R&D. It allowed us to represent each monitor as a graph of nodes: video nodes play each input into transition nodes, which let mix and opacity vary over time, and a compositing node brings everything together. It is all implemented in WebGL, which offloads the video processing to the GPU.
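The shape of such a graph can be sketched as plain data. This is not the VideoContext API: it is a minimal, hypothetical model (`SourceNode`, `TransitionNode`, `buildMonitorGraph` and `layerWeights` are illustrative names) assuming one transition node per layer, each fed by an outgoing and an incoming source, which is where the six inputs come from. `layerWeights` shows how a transition node's mix value splits visibility between its two sources.

```typescript
// Hypothetical plain-data model of one monitor's graph (not the VideoContext API).
type Layer = "video" | "lowerThird" | "dog";

interface SourceNode {
  id: string;
  layer: Layer;
  role: "outgoing" | "incoming";
}

interface TransitionNode {
  layer: Layer;
  inputs: [SourceNode, SourceNode]; // [outgoing, incoming]
  mix: number; // 0 = only outgoing visible, 1 = only incoming visible
}

interface MonitorGraph {
  // The compositor draws these in order: video at the bottom, DOG on top.
  compositorInputs: TransitionNode[];
}

const LAYERS: Layer[] = ["video", "lowerThird", "dog"];

function buildMonitorGraph(): MonitorGraph {
  const compositorInputs = LAYERS.map((layer): TransitionNode => ({
    layer,
    inputs: [
      { id: `${layer}-outgoing`, layer, role: "outgoing" },
      { id: `${layer}-incoming`, layer, role: "incoming" },
    ],
    mix: 0,
  }));
  return { compositorInputs };
}

// Visibility of each source feeding a transition node at its current mix value.
function layerWeights(node: TransitionNode): Map<string, number> {
  const [outgoing, incoming] = node.inputs;
  return new Map([
    [outgoing.id, 1 - node.mix],
    [incoming.id, node.mix],
  ]);
}

// Total number of source nodes across all layers.
function sourceCount(graph: MonitorGraph): number {
  return graph.compositorInputs.reduce((n, t) => n + t.inputs.length, 0);
}
```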

The flexible nature of the library allowed us to plug in our own WebGL shaders to cut out the lower third and DOG graphics using chroma-keying (where a particular colour, normally green, is declared to be transparent). With a small patch to allow VideoContext to use streaming video, we were up and running.
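The chroma-key calculation itself is easy to illustrate on the CPU. The real work was done in a WebGL shader; this minimal TypeScript sketch (with hypothetical `threshold` and `softness` parameters) computes each pixel's alpha from its distance to the key colour, the same per-pixel calculation a fragment shader would run. It uses plain RGB distance, which is a simplification: production keyers often work in a chroma colour space instead.

```typescript
// RGB components in the range 0..1, as a fragment shader would see them.
type RGB = [number, number, number];

// Same behaviour as GLSL smoothstep: 0 below edge0, 1 above edge1,
// and a smooth Hermite curve in between.
function smoothstep(edge0: number, edge1: number, x: number): number {
  const t = Math.min(1, Math.max(0, (x - edge0) / (edge1 - edge0)));
  return t * t * (3 - 2 * t);
}

// Alpha for one pixel: 0 at or near the key colour, rising to 1 further away.
// `threshold` and `softness` are hypothetical tuning parameters.
function chromaKeyAlpha(
  pixel: RGB,
  key: RGB = [0, 1, 0], // pure green
  threshold = 0.3, // distances below this are fully transparent
  softness = 0.1, // width of the ramp from transparent to opaque
): number {
  const dr = pixel[0] - key[0];
  const dg = pixel[1] - key[1];
  const db = pixel[2] - key[2];
  const distance = Math.sqrt(dr * dr + dg * dg + db * db);
  return smoothstep(threshold, threshold + softness, distance);
}
```

The soft ramp avoids hard, jagged edges around the cut-out graphic.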

Devils in the details

The details of how edits work were as fiddly as expected. Tracking the mix between two video inputs and tracking the opacity of two overlays looked like similar problems but required different solutions. The structure of the VideoContext graph also meant we had to keep track of which node was current, rather than always connecting the current input to the same node. We put a lot of unit tests around this to make sure it works as it should, now and in the future.
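One way to picture the "which node is current" bookkeeping is a two-slot bus: each new input is loaded into whichever slot is not on air, and the transition runs towards that slot. This is a minimal sketch under that assumption; `MixBus`, the slot names and `switchTo` are hypothetical, not taken from SOMA or VideoContext.

```typescript
type Slot = "A" | "B";

// Hypothetical two-slot mix bus: the transition's mix value runs
// 0 -> 1 to bring in slot B, or 1 -> 0 to bring slot A back.
class MixBus {
  private sources: Record<Slot, string | null> = { A: null, B: null };
  private onAir: Slot = "A";

  constructor(initialInput: string) {
    this.sources.A = initialInput;
  }

  // Load the next input into the idle slot and make that slot current.
  // Returns the slot used and the mix value the transition should animate to.
  switchTo(input: string): { slot: Slot; targetMix: number } {
    const next: Slot = this.onAir === "A" ? "B" : "A";
    this.sources[next] = input;
    this.onAir = next;
    return { slot: next, targetMix: next === "B" ? 1 : 0 };
  }

  currentInput(): string | null {
    return this.sources[this.onAir];
  }
}
```

Because the slots alternate, the source that is currently visible never has to be re-wired while it is on screen.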

By comparison, the seemingly tricky problem of what to do when a new edit decision is made while a mix is in progress turned out to be just a case of swapping out the new input, which stops the old input from reappearing unexpectedly.
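A minimal sketch of one reading of this, assuming a mix is described by its outgoing input, incoming input and progress (hypothetical names throughout): a decision made mid-mix replaces only the incoming input and leaves the outgoing input and the progress untouched, so the mix simply continues towards the newly chosen input.

```typescript
// Hypothetical mix state. progress: 0 = all outgoing, 1 = all incoming (finished).
interface MixState {
  outgoing: string;
  incoming: string;
  progress: number;
}

// Apply a new edit decision to the current mix state.
function applyDecision(state: MixState, newInput: string): MixState {
  if (state.progress < 1) {
    // Mix in progress: swap out the incoming input, keep outgoing and progress.
    return { ...state, incoming: newInput };
  }
  // No mix running: start a fresh mix from the input that is fully on air.
  return { outgoing: state.incoming, incoming: newInput, progress: 0 };
}
```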

QA testing revealed a subtler problem: when switching to a new input, the video takes a few tens of milliseconds to start. Cutting immediately causes a distracting flicker as a couple of blank frames are rendered. Waiting until the video is ready adds a slight delay, but this is significantly less distracting.

Later in the project, a new requirement emerged to re-frame videos within the application. Here the decision to use VideoContext paid off, as we could add an effect node to the graph to crop and scale the video input before mixing.
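The wait-until-ready behaviour can be sketched as a pending cut that is only applied on a render tick once the new input reports it has a frame to show. The readiness levels mirror `HTMLMediaElement.readyState`, where `HAVE_CURRENT_DATA` (2) means data for the current playback position is available; the `CutController` class and its methods are hypothetical, and a real implementation might prefer a higher threshold or the `canplay` event.

```typescript
// Mirrors the HTMLMediaElement.readyState constant of the same name.
const HAVE_CURRENT_DATA = 2;

// Hypothetical controller: the old input stays on air until the new one is ready,
// trading a slight delay for no blank frames.
class CutController {
  private onAir: string;
  private pending: string | null = null;

  constructor(initialInput: string) {
    this.onAir = initialInput;
  }

  // Request a cut; it is not applied until the new input is ready.
  requestCut(input: string): void {
    this.pending = input;
  }

  // Called once per rendered frame with the pending input's readyState.
  // Returns the input that should be on air for this frame.
  tick(pendingReadyState: number): string {
    if (this.pending !== null && pendingReadyState >= HAVE_CURRENT_DATA) {
      this.onAir = this.pending;
      this.pending = null;
    }
    return this.onAir;
  }
}
```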
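Re-framing amounts to choosing a crop rectangle in the source and scaling it to fill the output. A minimal sketch, assuming the crop is given in source pixels and the effect works in normalised 0..1 texture coordinates as a WebGL shader would; `CropRect`, `cropToTexTransform` and `mapUV` are hypothetical names, and any vertical flip between image and texture coordinates is ignored.

```typescript
interface CropRect {
  x: number; // left edge in source pixels
  y: number; // top edge in source pixels
  width: number;
  height: number;
}

// Offset and scale that map output texture coordinates onto the crop rectangle.
interface TexTransform {
  offset: [number, number];
  scale: [number, number];
}

function cropToTexTransform(
  crop: CropRect,
  sourceWidth: number,
  sourceHeight: number,
): TexTransform {
  return {
    offset: [crop.x / sourceWidth, crop.y / sourceHeight],
    scale: [crop.width / sourceWidth, crop.height / sourceHeight],
  };
}

// Source texture coordinate sampled for a given output coordinate:
// source = offset + uv * scale, as a shader would compute it.
function mapUV(uv: [number, number], t: TexTransform): [number, number] {
  return [t.offset[0] + uv[0] * t.scale[0], t.offset[1] + uv[1] * t.scale[1]];
}
```

To avoid distorting the picture, the crop rectangle should keep the output's aspect ratio; for example, a 960×540 crop of a 1920×1080 source is still 16:9.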

And finally...

VideoContext made the mixing and compositing operations a lot easier than they would have been otherwise. Towards the end we even added an image source (for paused VTs) using the new experimental Chrome feature captureStream, and that worked really well.

Once it all worked, the obvious concern was performance, and overall it performs pretty well. We needed around half a dozen VideoContexts running at once, which ran well on a powerful machine; many more than that and the computer really starts to struggle.

Even a few years ago, attempting this in the browser would have been madness, so it's great to see so much progress on something so challenging, opening up a whole new range of software that can run in the browser!
