Over the past few years, BBC R&D has been leading the way in creating new kinds of audience experiences. Our recent projects have provided a personalised and interactive cooking experience, presented you with more relevant news stories and produced videos of variable length.

All of these experiences were made possible using a technique called object-based broadcasting. Producing and delivering object-based content is difficult at the moment because the tools and protocols we need are not yet readily available. To help fix this problem, we are involved in ORPHEUS, an EU project that aims to create an end-to-end object-based broadcast chain for audio.

Today we are launching The Mermaid's Tears. We created this programme using the tools and protocols we developed as part of ORPHEUS, to see whether we could successfully use them in a real production. To make the most of the possibilities offered by this new technology, we commissioned a writer to create a radio play especially for the project. We then produced the drama in collaboration with BBC Radio Drama London.

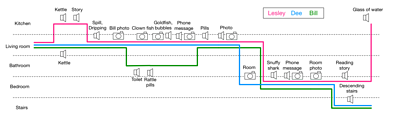

In the drama, you can choose to follow one of three characters. As the characters move around, you will hear their different perspectives on the story, which may influence your opinion of what really happened. The story and characters are illustrated with images that are triggered at specific times throughout the programme. We made the drama in immersive 3D audio, and you can listen in stereo, surround sound or with binaural audio on headphones.

The BBC has created interactive radio dramas before. However, there are several things that make The Mermaid's Tears special. Firstly, the drama is streamed to the listener as a collection of individual audio objects, with instructions for the receiver on how to mix them together for each character. This means we can broadcast one set of content but create three different experiences. Secondly, we broadcast a version of the programme live to the web, which we believe was the world's first live interactive object-based drama. To do this, we needed to design and build our own custom production tools that were capable of producing three experiences simultaneously.
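As a rough illustration of "one set of content, three experiences", the sketch below mixes the same audio objects with a different set of gains for each character. It is a minimal sketch under stated assumptions, not the production renderer: the object names, the character labels, the gain values and the `render` function are all hypothetical, and a real renderer would also handle position, timing and loudspeaker layout.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AudioObject:
    name: str             # hypothetical object identifier
    samples: List[float]  # mono samples; all objects share one length


# Hypothetical mix instructions: character -> {object name -> linear gain}.
MIXES: Dict[str, Dict[str, float]] = {
    "character_a": {"voice_a": 1.0, "voice_b": 0.25, "ambience": 0.5},
    "character_b": {"voice_a": 0.25, "voice_b": 1.0, "ambience": 0.5},
    "character_c": {"voice_a": 0.5, "voice_b": 0.5, "ambience": 1.0},
}


def render(objects: List[AudioObject], gains: Dict[str, float]) -> List[float]:
    """Sum the shared objects into one mono signal using the given gains."""
    if not objects:
        return []
    out = [0.0] * len(objects[0].samples)
    for obj in objects:
        gain = gains.get(obj.name, 0.0)  # objects without a gain are muted
        for i, sample in enumerate(obj.samples):
            out[i] += gain * sample
    return out
```

The same list of `AudioObject` values is passed to `render` for every character. Only the gain table changes, which is why a single broadcast can serve all three perspectives.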

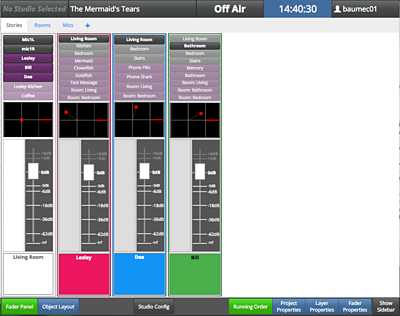

We created this programme using our object-based radio studio in BBC Broadcasting House, which we started building last summer. The studio is built around R&D's IP Studio platform, which gives us the flexibility we need to produce and broadcast object-based audio. Traditional studios use expensive audio equipment and limit the number of channels we can use. IP Studio allows us to use low-cost commodity IT hardware, and to control and process multiple audio channels using custom software. For playback, we installed a '4+7+0' speaker setup in the studio so we could mix the audio in three dimensions.

With object-based audio, the sound is mixed on the listener's device (in this case, a web browser). You will see in the photo above that there is no mixing desk in the studio. This is because we developed a custom 'audio control interface' that does not process the audio, but generates a stream of metadata describing the sound mix for each character. We then send that stream alongside the audio to the listener's device, which mixes the audio for whichever character they want to listen to.
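The split between a control interface that only emits metadata and a receiver that does the mixing can be sketched as follows. This is an illustrative sketch only: the JSON frame layout, the `make_frame` helper and the `Receiver` class are assumptions made for the example, not the format or code used in the production.

```python
import bisect
import json
from typing import Dict, List


def make_frame(time_s: float, mixes: Dict[str, Dict[str, float]]) -> str:
    """Studio side: describe the mix for every character; carries no audio."""
    return json.dumps({"time": time_s, "mixes": mixes})


class Receiver:
    """Listener side: stores metadata frames and looks up the current mix."""

    def __init__(self) -> None:
        self._times: List[float] = []
        self._mixes: List[Dict[str, Dict[str, float]]] = []

    def on_frame(self, raw: str) -> None:
        # Assumption: frames arrive in increasing time order.
        frame = json.loads(raw)
        self._times.append(frame["time"])
        self._mixes.append(frame["mixes"])

    def gains_at(self, time_s: float, character: str) -> Dict[str, float]:
        """Return the gains from the latest frame at or before time_s."""
        index = bisect.bisect_right(self._times, time_s) - 1
        if index < 0:
            return {}  # no metadata received for this time yet
        return self._mixes[index].get(character, {})
```

Because every frame carries the mix for all characters, switching character on the listener's device is just a different lookup key. Nothing needs to be requested from the broadcaster.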

The 'Audio Definition Model' (or ADM) is an existing standard for object-based audio files that we helped to write. We are also involved in developing a new follow-up standard for streaming, which we call 'Serialised ADM'. For The Mermaid's Tears, we used Serialised ADM to transmit the metadata that describes the mix for each character. Another metadata stream triggers the images in the web browser.
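The image-trigger stream can be thought of as a list of timed cues that the player checks as playback advances. The sketch below shows one simple way to do that. The `Cue` tuple, the `due_cues` function and the file names are hypothetical, and this is not the Serialised ADM format.

```python
from typing import List, Tuple

# Hypothetical cue: (trigger time in seconds, image identifier).
Cue = Tuple[float, str]


def due_cues(cues: List[Cue], previous_s: float, now_s: float) -> List[str]:
    """Return images whose trigger time falls in the interval (previous_s, now_s]."""
    return [image for trigger_s, image in cues if previous_s < trigger_s <= now_s]


# Example cue list with made-up times and file names.
EXAMPLE_CUES: List[Cue] = [
    (0.0, "opening.png"),
    (10.0, "harbour.png"),
    (25.5, "kitchen.png"),
]
```

Calling `due_cues` once per playback tick, with the previous and current playback positions, fires each image exactly once even if ticks are irregular.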

The Mermaid's Tears is the first of two pilot programmes we are producing as part of the ORPHEUS project. For our next pilot, we will be experimenting with how to produce and deliver non-linear object-based audio, such as variable depth programmes. To do this, we plan to use R&D's .

These two pilot programmes are feeding into a wider body of work within ORPHEUS. As we learn more about object-based broadcasting, we are designing a reference architecture and writing the standards and guidelines on how best to implement object-based audio. By publishing these, we hope to inspire and assist other broadcasters to adopt and implement this exciting new technology.

Credits:

Production team

- Producer/Director: Jessica Mitic

- Writer: Melissa Murray

- Sound Designer: Caleb Knightley

- Graphic Design: Jacob Phillips

Technical team

- Director: Chris Baume

- Production system: Matthew Firth

- Distribution and rendering: Matthew Paradis

- Studio build and support: Andrew Mason

- Studio rendering: Richard Taylor

- User interface: Andrew Nicolaou

Cast

- Lesley: Sarah Ridgeway

- Dee: Chetna Pandya

- Bill: Simon Ludders

- Sally: Lara Laight

Thanks to

- James Sandford

- David Marston

- Rob Wadge

- Jon Tutcher

- Chris Roberts

- Tom Parnell

- Peter Taylour

- Ben Robinson

Learn more about this project by reading the many that we've published on the ORPHEUS website.

- BBC R&D - Talking with Machines

- BBC R&D - Singing with Machines

- BBC R&D - Better Radio Experiences

- BBC R&D - Responsive Radio

Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.