Following last year's release of The Inspection Chamber, our voice-interactive sci-fi audio drama for smart speakers, we carried out a detailed user study to gauge how people feel about interactive audio productions.

One interesting wrinkle was about the level of interaction people wanted with their audio content. There was a split between people who wanted more interaction - something more like a game - and people who didn't like interacting with a story at all, once it was underway - more like traditional radio. Even in the second group, there was still an appetite for stories which could change and do interesting things - just not necessarily in a loop of constant interaction.

While we were thinking about this split, I happened to have a chat with creative technologist and friend-of-R&D , who mentioned BBC Radio 3's 2010 dramatisation of The Unfortunates, originally a 1969 experimental novel by B.S. Johnson. We dug into it a little more, and found a perfect example of a story we could bring to smart speakers, creating something which would sound and interact like a traditional radio programme but also take advantage of new technology.

Johnson's novel was famously published as a 'book in a box' - 27 unbound sections in a box, intended to be shuffled and read in a new order every time the reader picked it up. Only the first and last sections were intended to be read in a fixed position, and were labelled as such. The story follows a sports journalist whose memories of a dear, dead friend are triggered when he is sent to report on a football match, and the randomness of the book is designed to mimic the way the mind jumps between recollections and connections when lost in thought.

When adapted the book for the award-winning dramatisation, which starred Martin Freeman, it was also made in sections - 17 parts, which were kept separate through production. The broadcast order was picked live on air in an edition of The Verb, and the broadcast went out the following Sunday. Radio being a linear medium, this meant that this broadcast version was now 'frozen' in one order - the randomness had been lost. The separate parts were, however, made available on the Radio 3 website.

Near the beginning of this year, I collaborated on a quick prototype with BBC R&D alumnus Tom Howe, chopping the Radio 3 broadcast back up into its parts and building a player for Alexa which shuffled those parts into a new order - essentially, a randomised playlist. We then took that version to a meeting with the Radio 3 creative team - Graham and producer Mary Peate - who had no idea what we'd been up to!

Luckily, they loved what we'd done to their show, and were very supportive of our new treatment. This meant that the hard work of building out our prototype into a full skill could begin.
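The randomised playlist at the heart of that prototype can be sketched in a few lines of Python. This is an illustration rather than the code we shipped: the part identifiers and the `shuffled_order` function are hypothetical, and keeping the first and last parts in place is an assumption carried over from how Johnson's book was labelled.

```python
import random


def shuffled_order(part_ids, rng=None):
    """Return a new playback order for the given parts.

    Assumption: the first and last parts stay where they are, as in the
    book; only the parts in between are shuffled.
    """
    rng = rng or random.Random()
    parts = list(part_ids)
    if len(parts) < 3:
        # Nothing to shuffle between a fixed opening and a fixed ending.
        return parts
    middle = parts[1:-1]
    rng.shuffle(middle)
    return [parts[0], *middle, parts[-1]]


# Hypothetical identifiers for the 17 parts of the radio dramatisation.
PARTS = [f"part-{n:02d}" for n in range(1, 18)]

order = shuffled_order(PARTS)
```

Because listening can be paused and picked up again later, an order generated like this would need to be stored against the listener's session rather than regenerated each time the skill is opened.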

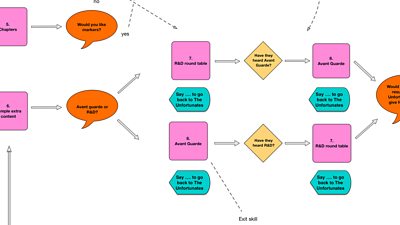

One of the most challenging parts of building an application for smart speakers - certainly more challenging than writing the software itself - is designing the conversation flow through the application: mapping out what the device is going to say, and anticipating what users might say in response or to request functionality. Our application ended up having three strands of content: the main story, The Advance Guard of the Avant-garde (a documentary about 1960s experimental literature), and a behind-the-scenes making-of discussion (like a director's commentary on a DVD). At any time, the user could either be listening to one of these strands or re-entering the skill and navigating their way to one of the others. The nature of voice commands means that the user can interrupt any of these modes and jump to another state at any time, and the nature of the devices means that a session might run in one long chunk, or be paused and resumed over a day or several days. The system needs to be able to deal with all of these possible states and address the user appropriately, and even in a relatively small skill like this, the permutations can stack up. This requires a lot of thinking upfront to try to anticipate and design for these states. A useful way to capture these design decisions is in a VUX diagram - essentially, a flow chart for conversations. We've included a version of the VUX diagram for The Unfortunates here.

Excerpt of the user experience flow in The Unfortunates
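To give a flavour of how a flow chart like this turns into code, here is a minimal sketch of a conversation state handler. Everything in it is hypothetical: the intent names, the prompt identifiers and the `Session` class are invented for illustration, and it does not use the Alexa SDK or reflect the structure of the real skill.

```python
from dataclasses import dataclass
from typing import Optional

# The three strands of content described above.
STRANDS = ("story", "documentary", "making_of")


@dataclass
class Session:
    # The strand the listener was last in, or None if they are at the menu.
    strand: Optional[str] = None


def handle(session, intent, strand=None):
    """Update the session for one user intent and return the id of the
    spoken prompt to play next (all ids are hypothetical)."""
    if intent == "launch":
        # A returning listener is offered the chance to pick up where
        # they left off; a new one hears the welcome menu.
        if session.strand is None:
            return "welcome_menu"
        return f"resume_offer_{session.strand}"
    if intent == "choose_strand" and strand in STRANDS:
        # The listener can jump to any strand from any state.
        session.strand = strand
        return f"intro_{strand}"
    if intent == "menu":
        session.strand = None
        return "main_menu"
    # Anything we did not anticipate gets a gentle re-prompt.
    return "reprompt"
```

Even in a toy like this, every state needs an answer for every intent, which is where the permutations start to stack up.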

Our other main challenge was managing the sheer number of audio assets necessary to render the skill's interface. We decided early on that using the system Alexa voice wouldn't work for this skill - we needed to use a human voice to talk to the listener. We wrote a script for the skill, derived from the VUX flow work, which broke down into 48 separate spoken chunks. We then had to record, edit, master, encode and upload those sections of speech while keeping them tied to the script so that the skill could play the correct chunk of speech according to where the user was in the conversation flow. We ended up writing a set of simple tools to do this that relied on a master script held in a shared spreadsheet. As audio assets moved along the production pipeline, we edited and updated the spreadsheet, which was then read by the tools to generate data files and encode and upload those assets.
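As a rough illustration of the spreadsheet-driven approach, the sketch below reads a CSV export of a master script and produces a lookup from chunk id to audio URL, along with a list of chunks that are not yet ready. The column names, status values, placeholder script lines, file names and base URL are all assumptions made for the example; they are not the layout of our actual spreadsheet or tools.

```python
import csv
import io

# Hypothetical CSV export of the master script (placeholder wording).
SCRIPT_CSV = """chunk_id,text,status,filename
welcome,Placeholder welcome line,uploaded,welcome.mp3
menu,Placeholder menu line,uploaded,menu.mp3
resume,Placeholder resume line,recorded,resume.wav
"""


def build_manifest(csv_text, base_url):
    """Split the script into chunks that are ready to play and chunks
    still moving through the production pipeline.

    Returns (ready, pending): ready maps chunk_id to an audio URL,
    pending lists the chunk_ids whose status is not 'uploaded'.
    """
    ready = {}
    pending = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["status"] == "uploaded":
            ready[row["chunk_id"]] = f"{base_url}/{row['filename']}"
        else:
            pending.append(row["chunk_id"])
    return ready, pending


ready, pending = build_manifest(SCRIPT_CSV, "https://example.com/audio")
```

Keeping the spreadsheet as the single source of truth means the skill only ever looks up a chunk by its id, so a line can be re-recorded or re-encoded without touching the conversation logic.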

When you think of an Alexa skill you don't necessarily think of visuals, but we do need artwork to represent the sections of the main story on , which have screens. Andrew and Joanna created some beautiful artwork, using abstract photography and projection to create images that reflect the feel of each section without destroying the ambiguity of the piece. Ant did a lovely job of integrating that artwork into the skill, using Amazon's new where available and now-playing track metadata.

We're really happy with the way the skill's turned out - there's something very pleasing about going back to a production that was limited by the technology of its time and re-presenting it in a way that's closer to both the creative intent of the Radio 3 team and the spirit of Johnson's original book. In an interview excerpted in The Advance Guard of the Avant-garde, he says that 'the randomness of the material was directly in conflict with the book as a technological object'. We hope that by using the randomness available to us in a new technological object, we have built on Graham & Mary's wonderful treatment of the work in a way that Johnson would have felt does the material justice.

Additionally, by including extra material along with the main story, we're able to test the idea of using one programme as a jumping-off point to explore further content, something which will be useful to our friends in .

Our version of The Unfortunates is free to use. You can find it by or on BBC Taster.

Thanks to the whole Talking with Machines team: Joanna, Andrew, Ant, Henry and Nicky. Thanks also to Tom Howe, Caroline Alton, Mary Peate and Graham White, LJ Rich, Jeanette Percival and Tom Armitage.

-

Internet Research and Future Services section

The Internet Research and Future Services section is an interdisciplinary team of researchers, technologists, designers, and data scientists who carry out original research to solve problems for the BBC. BBC focuses on the intersection of audience needs and public service values, with digital media and machine learning. We develop research insights, prototypes and systems using experimental approaches and emerging technologies.