Object-based media has been a focus of BBC R&D’s work for many years, exploring how it can provide audiences with personalisable audio and visual content across radio, television and online media. Its potential goes beyond adaptive and immersive experiences and opens up new avenues for improving accessibility and better meeting the varied needs of our audiences. This A&E Audio trial has been supported by the EPSRC S3A: Future Spatial Audio Project and is a collaboration between BBC R&D, Casualty, and the University of Salford. Lauren Ward, at BBC R&D while completing her PhD, writes more about the background to the trial.

Hearing impairment affects the ease with which people access audio-visual content. It not only makes the sounds in the world around you quieter, but can often cause speech to become jumbled and unintelligible, particularly when there are many other competing sounds. This is why turning up the telly doesn’t necessarily make the content easier to understand: it just makes everything louder.

The ease of understanding speech on TV depends not only on hearing acuity, but on many other factors too. For example, when we are familiar with the topic being discussed, we can hear almost twice as well in the same level of background sound as we can with an unfamiliar topic. Conversely, a presenter we have never heard before will reduce our speech understanding compared with a presenter we listen to every day.

Furthermore, what we enjoy and want out of TV audio also differs from person to person, and from programme to programme. The perfect football mix for one viewer, with loud stadium sound to make them feel immersed in the crowd, may mean another viewer cannot hear the commentary.

Object-based media presents a potential way to accommodate this individual variability through delivering personalisable content, which viewers can adapt to their preferences and hearing needs.

The importance of narrative

In a single television show there are often hundreds, if not thousands, of separate audio clips. Hypothetically, object-based audio could allow you to alter the volume of every one of them individually! However, this would not make sitting down to watch TV an accessible, or relaxing, experience. We had to come up with a way to give the viewer as much control over the mix as possible, whilst making personalising the audio as simple as changing the channel.

Previous explorations in object-based audio have dealt with this issue either by segregating the sound into two categories, speech and non-speech, or into . What these approaches didn’t take into account, however, was the information carried by non-speech sounds that are crucial to the narrative: consider the gunshot in a ‘whodunnit’ or the music in the film Jaws. Without these important sounds, the story just wouldn’t make sense.

Working on the A&E mix with the Casualty Post Production team at BBC Roath Lock Dubbing Studio.

This is what makes the approach taken in the A&E Audio trial different. Through interviewing and surveying content producers, we developed a system which balanced narrative comprehension and creativity with the needs of hearing-impaired listeners. In this system, sounds in a particular mix are ranked by the producer based on their ‘narrative importance’ (how crucial they are to telling the story).

The majority of producers agreed that a four-level hierarchy gave enough flexibility for allocating sounds without being too cumbersome. Dialogue is essential, and is always ranked at the top. How the remaining elements are ranked depends on the content and on how they fit into that content’s narrative.

In an object-based media piece the importance of each sound would be encoded in metadata. This means a single control, or “slider” as in the A&E Audio trial, can control each object differently, based on its metadata.
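
As a rough illustration of what "importance encoded in metadata" could look like, here is a minimal Python sketch. It is hypothetical and not the metadata format used in A&E Audio: the article only says there are four levels with dialogue always at the top, so the level names `LOW`, `MEDIUM`, `HIGH` and `ESSENTIAL`, and the names `AudioObject` and `objects_by_importance`, are assumptions.

```python
from dataclasses import dataclass
from enum import IntEnum


class NarrativeImportance(IntEnum):
    """Hypothetical four-level hierarchy: a higher value means more crucial to the story."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    ESSENTIAL = 3  # dialogue is always ranked here


@dataclass(frozen=True)
class AudioObject:
    """One sound in the mix plus the (assumed) metadata a renderer would read."""
    name: str
    importance: NarrativeImportance
    is_dialogue: bool = False

    def __post_init__(self) -> None:
        # Enforce the rule that dialogue always sits at the top of the hierarchy.
        if self.is_dialogue and self.importance != NarrativeImportance.ESSENTIAL:
            raise ValueError(f"{self.name}: dialogue must be ranked ESSENTIAL")


def objects_by_importance(objects):
    """Group object names by importance level, so one control can treat each group differently."""
    groups = {level: [] for level in NarrativeImportance}
    for obj in objects:
        groups[obj.importance].append(obj.name)
    return groups


scene = [
    AudioObject("nurse_line", NarrativeImportance.ESSENTIAL, is_dialogue=True),
    AudioObject("ward_ambience", NarrativeImportance.LOW),
]
print(objects_by_importance(scene))
```

Because the ranking travels with each object rather than being baked into a single mixed track, the receiving device can decide how loud each object should be at playback time.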

At one end of this slider is the full broadcast mix, with all objects reproduced at their original volume (in A&E Audio, this end of the slider gives the TV mix). As the slider is moved towards the accessible end, essential sounds (dialogue) are increased in volume, low-importance sounds are attenuated, and important non-speech sounds remain the same.
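
The slider behaviour described above can be sketched as a per-object gain offset that depends only on the slider position and the object's importance metadata. This is a minimal sketch under stated assumptions: the article does not give the gains used in A&E Audio, so the dB values, the linear interpolation in dB, the partial attenuation of the second-lowest level, and the names `object_gain_db` and `db_to_linear` are all hypothetical.

```python
from enum import IntEnum


class NarrativeImportance(IntEnum):
    """Hypothetical four-level hierarchy (names are assumptions)."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    ESSENTIAL = 3


# Hypothetical gain offsets (dB) at the fully "accessible" end of the slider:
# essential sounds are boosted, important sounds are unchanged, the rest are attenuated.
ACCESSIBLE_END_GAIN_DB = {
    NarrativeImportance.ESSENTIAL: 6.0,
    NarrativeImportance.HIGH: 0.0,
    NarrativeImportance.MEDIUM: -10.0,  # assumption: partial attenuation
    NarrativeImportance.LOW: -20.0,
}


def object_gain_db(importance: NarrativeImportance, slider: float) -> float:
    """Gain offset for one object; slider=0.0 is the broadcast mix, 1.0 the accessible mix."""
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be in [0, 1]")
    # Interpolate linearly in dB between the original level (0 dB) and the accessible target.
    return slider * ACCESSIBLE_END_GAIN_DB[importance]


def db_to_linear(gain_db: float) -> float:
    """Convert a dB offset to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)


for level in NarrativeImportance:
    gain = object_gain_db(level, 0.5)
    print(f"{level.name:9} at slider 0.5: {gain:+.1f} dB (x{db_to_linear(gain):.3f})")
```

Interpolating in decibels rather than in linear amplitude roughly tracks how loudness changes are perceived; a real renderer would also need to guard against clipping when dialogue is boosted.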

In previous approaches to personalised audio, a beeping heart monitor in Casualty would be labelled ‘background’ or ‘SFX’ and attenuated by the same amount regardless of its importance in the scene. Using the narrative importance approach, a beeping heart monitor included to give hospital ambience is low importance, and can be reduced in volume without affecting the narrative. A heart monitor indicating that a character is going into cardiac arrest, however, is high importance and is always retained in the mix, so that the context of the dialogue is maintained.
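
To make the contrast concrete, here is a hypothetical comparison of the two approaches for the heart monitor example, at the fully accessible end of the control. The category labels, importance labels, object names and dB values are illustrative assumptions, not values from the trial.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sound:
    name: str
    category: str    # e.g. "dialogue" or "sfx": the kind of label used by earlier approaches
    importance: str  # hypothetical narrative-importance label set by the producer


# Hypothetical gain offsets (dB) at the accessible end of the control.
CATEGORY_GAIN_DB = {"dialogue": 6.0, "sfx": -20.0}
IMPORTANCE_GAIN_DB = {"essential": 6.0, "high": 0.0, "medium": -10.0, "low": -20.0}


def gain_by_category(sound: Sound) -> float:
    """Earlier approach: every 'sfx' object is attenuated by the same amount."""
    return CATEGORY_GAIN_DB[sound.category]


def gain_by_importance(sound: Sound) -> float:
    """Narrative-importance approach: the producer's ranking decides the gain."""
    return IMPORTANCE_GAIN_DB[sound.importance]


scene = [
    Sound("doctor_dialogue", "dialogue", "essential"),
    Sound("ward_heart_monitor", "sfx", "low"),    # hospital ambience only
    Sound("arrest_heart_monitor", "sfx", "high"), # signals cardiac arrest
]

for s in scene:
    print(f"{s.name:22} category: {gain_by_category(s):+.1f} dB"
          f"  importance: {gain_by_importance(s):+.1f} dB")
```

With category labels alone, both heart monitors are pushed down together; with importance metadata, only the ambient one is.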

This gives the audience control over the complexity of the mix, allowing viewers to find the balance of objects that gives them the best experience.

It also presents a low barrier to access as the slider is as simple to use as a volume control.

Prior to the public trial, we ran focus groups with hard-of-hearing listeners to try out the control. The results were positive, and participants noted that the approach improved intelligibility whilst still maintaining the ‘depth’ and ‘colour’ of the content.

Focus group with target end users in the University of Salford’s Listening Room.

A&E Audio on Taster

This has been our first trial of the technology outside the lab. Our next step will be to analyse the feedback and data we have collected from the trial. This will allow us to determine what benefit this kind of personalised audio gives the audience, in particular hard-of-hearing viewers, and guide where this work goes next. We also plan to explore the user experience of the interface for controlling mix personalisation, and to improve how the interface works across different browsers and platforms.

We also have a better understanding now of the production tools we would need to develop for this kind of personalised content to be made more regularly. Finally, a crucial part of our future plans is working towards standardisation and roll-out of object-based audio technology. We have been working with international groups like the and on the standardisation of such technologies in recent years. We hope that in future we can turn one-off trials like this into regular services for our audiences.

If you haven’t already tried the A&E Audio trial on Taster, it is available until the 31st August.

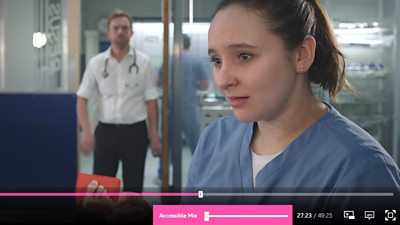

The episode of Casualty available on BBC Taster: the slider (in pink) is set to the 'accessible' mix.

We would like to thank all those on the A&E Audio team that made this trial possible:

- Dr. Matt Paradis (BBC R&D Engineer)
- Dr. Ben Shirley (University of Salford)
- Rhys Davies (Casualty Post-Production Supervisor)
- Robin Moore (BBC R&D Producer)
- Laura Russon (Casualty Dubbing Mixer)
- Gabriella Leon (Casualty)
- Joe Marshall (Casualty Social Media)

We would also like to thank all the people from BBC R&D, Casualty and the University of Salford who helped develop the project, and all our research participants, past and present, who have guided this work.


Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.