As part of our Cloud-fit Production project, we've been investigating storing individual video frames in the cloud. In a previous blog post, we described how we'd tested a 20Gbps cumulative upload rate into an S3 bucket (we didn't test any further) and found there was a cost vs latency tradeoff: smaller objects mean lower latency, but require more cloud resources. We also promised we'd look into downloads. So how fast can we read objects out of S3? And while we're at it, what about other object stores?

Read Performance

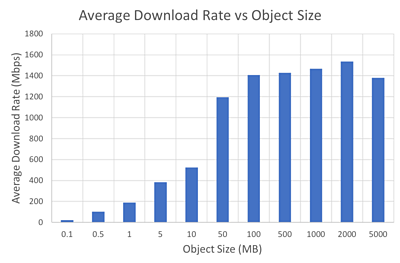

Well, the results aren't all that surprising. As the graph below shows, we can download at about 1.4Gbps when objects are larger than about 100MB. For smaller objects, we found we can run several download workers in parallel to achieve similar overall rates. We generated these results by writing a large number of test objects into S3, reading them back on several EC2 compute instances, measuring how long each read took, then calculating the rate for each read and averaging. All our experiments were fully automated: creating cloud infrastructure, running tests and analysing the data without supervision. We'll talk more about our automation tooling in a future post.
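As a rough illustration of the rate calculation described above, here is a minimal sketch. The function names and the example timings are hypothetical, not taken from our test tooling; it simply converts each read's size and duration into gigabits per second and averages the per-read rates.

```python
from statistics import mean


def rate_gbps(size_bytes: int, seconds: float) -> float:
    """Download rate of a single read, in gigabits per second."""
    return size_bytes * 8 / seconds / 1e9


def average_rate_gbps(reads) -> float:
    """Average the per-read rates over (size_bytes, seconds) pairs."""
    return mean(rate_gbps(size, secs) for size, secs in reads)


# Hypothetical timings for three reads of a 100 MB object (illustrative only).
example_reads = [(100_000_000, 0.55), (100_000_000, 0.60), (100_000_000, 0.57)]
print(f"{average_rate_gbps(example_reads):.2f} Gbps")
```

Averaging per-read rates weights every read equally; dividing total bytes by total time would instead weight slower reads more heavily, so the two figures can differ slightly.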

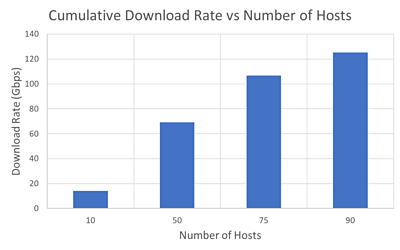

We also looked into how far we could scale; how many download hosts can we have before S3 starts to slow down? As with our write tests, per-host average download rate remains steady up to 90 hosts (as before, we didn't test any further). The cumulative download rate across all hosts tops out just above 120Gbps; more than enough for our needs!

Running our tests with a range of instance sizes shows the "c5.large" has the best balance of CPU and network performance for the price; this is true for both reading from and writing to the store. We also tested performance on the "t2.micro" burstable instance. The "t2" family have a low baseline performance level and they accrue CPU credits over time, which can be spent to give temporary performance bursts. In the write test, the "t2.micro" upload rate decayed steadily over time (due to running out of credits); however, read test performance was stable for the duration. This suggests that reading is far less dependent on CPU, and is mostly limited by network I/O performance.

Threading

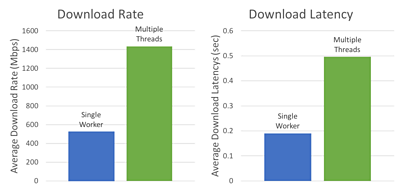

We've talked about parallelisation by running multiple EC2 instances, but it's also possible to run several workers on an instance, for example by using threads. Each thread is assigned a different frame or object to download, and several can be "in-flight" at a time. The operating system manages the schedule of when each thread runs, and threads which are "blocked" (for example waiting for a reply from S3) yield CPU time to those ready to go. However, there are downsides to this approach: parallel processing adds significant complexity and scheduling is imperfect, so threads often have to wait to be scheduled even when they stop being "blocked". As a result the throughput (total download rate) increases because multiple workers are contributing, but each frame is "in-flight" for longer, increasing latency.

The diagram below illustrates how this fits together: a sequence of media objects exists in the store, each containing one second of video. Four readers (worker threads) run in parallel to retrieve objects. Each worker alone takes three seconds to download an object, but four objects are downloaded at the same time, so on average 1.33 objects are downloaded per second.
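The arithmetic in that example can be written out as a short sketch. The function names are hypothetical; the calculation assumes every worker is always busy and every download takes the same time, which real downloads will only approximate.

```python
import math


def throughput_objects_per_second(workers: int, seconds_per_object: float) -> float:
    """Steady-state throughput when every worker is always busy."""
    return workers / seconds_per_object


def min_workers_for_real_time(seconds_per_object: float, object_duration: float) -> int:
    """Fewest workers whose combined throughput keeps pace with playback."""
    return math.ceil(seconds_per_object / object_duration)


# Four workers, three seconds per download, as in the example above.
print(round(throughput_objects_per_second(4, 3.0), 2))  # 1.33

# Each object holds one second of video, so three workers would just keep up.
print(min_workers_for_real_time(3.0, 1.0))  # 3
```

Since each object holds one second of video, anything above one object per second means the readers are running faster than real time, at the cost of each object spending three seconds in flight.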

Our tests show that using multiple (10 to 20) read threads brings the total download rate back up to around 1.4Gbps for small 10MB objects. However, this comes with some increase in latency, rising from 0.2 seconds to 0.5 seconds for 10MB objects.
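The threaded approach described in this section might look something like the sketch below. It is not our test code: `fetch_object` is a hypothetical stand-in that sleeps to simulate a blocking S3 request (a real reader would call an S3 client there), and the key names are made up. It records how long each object was in flight so that throughput and per-object latency can be compared.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_object(key: str) -> bytes:
    """Hypothetical stand-in for a blocking S3 GET request."""
    time.sleep(0.05)  # simulate waiting for the network
    return key.encode("utf-8")


def timed_fetch(key: str):
    """Fetch one object and record how long it was in flight."""
    start = time.monotonic()
    data = fetch_object(key)
    return key, len(data), time.monotonic() - start


def download_all(keys, workers: int = 4):
    """Download every key using a pool of worker threads, preserving key order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(timed_fetch, keys))


keys = [f"frame-{i:04d}" for i in range(8)]
start = time.monotonic()
results = download_all(keys, workers=4)
elapsed = time.monotonic() - start
print(f"{len(results)} objects in {elapsed:.2f}s")
```

Because blocked threads spend most of their time waiting on the network, several requests can overlap; varying `workers` in a sketch like this is one way to observe the throughput versus latency tradeoff.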

Other Object Stores

A wider aim of R&D's Cloud-fit production work is to build portable services, where components can be improved, swapped or run on different providers without major modification to the rest of the system. For example, the media object store service will be flexible to the underlying storage provider, while providing a well-defined API to other services.
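One way to picture this separation is a small interface that each storage provider implements. The sketch below is illustrative only: the class and method names (`ObjectStoreBackend`, `MediaObjectStore`, `write_frame`, `read_frame`) are hypothetical and not the service's actual API, and an in-memory dictionary stands in for a real provider.

```python
from abc import ABC, abstractmethod


class ObjectStoreBackend(ABC):
    """Hypothetical provider-specific storage interface."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None:
        ...

    @abstractmethod
    def get(self, key: str) -> bytes:
        ...


class InMemoryBackend(ObjectStoreBackend):
    """Toy backend for illustration; an S3 or Ceph backend would replace it."""

    def __init__(self) -> None:
        self._objects = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class MediaObjectStore:
    """Provider-independent API that other services would call."""

    def __init__(self, backend: ObjectStoreBackend) -> None:
        self._backend = backend

    def write_frame(self, object_id: str, timestamp: str, frame: bytes) -> None:
        self._backend.put(f"{object_id}/{timestamp}", frame)

    def read_frame(self, object_id: str, timestamp: str) -> bytes:
        return self._backend.get(f"{object_id}/{timestamp}")


store = MediaObjectStore(InMemoryBackend())
store.write_frame("camera-1", "0:0", b"frame bytes")
print(store.read_frame("camera-1", "0:0"))
```

Callers only ever see `MediaObjectStore`, so swapping the backend does not require changes elsewhere in the system.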

Hiding the implementation behind a defined API means we can optimise the internal storage interface on a provider-by-provider basis. For example, an optimisation we use for AWS S3 is to spread object keys across the bucket. The S3 version of the media object store service will then provide the mapping to convert object identity and timestamp to a key.
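As an illustration of such a mapping, the sketch below prepends a short hash-derived prefix to each key so that consecutive timestamps land in different parts of the key space. This is one common technique and an assumption on our part for the example; the function name, key layout and prefix length are hypothetical rather than the scheme the service actually uses.

```python
import hashlib


def object_key(object_id: str, timestamp: str, prefix_len: int = 4) -> str:
    """Map an object identity and timestamp to a key with a hashed prefix.

    Hypothetical layout: "<hash prefix>/<object_id>/<timestamp>".
    """
    name = f"{object_id}/{timestamp}"
    digest = hashlib.sha256(name.encode("utf-8")).hexdigest()
    return f"{digest[:prefix_len]}/{name}"


# Consecutive timestamps generally receive unrelated prefixes.
for ts in ("1000:0", "1001:0", "1002:0"):
    print(object_key("camera-1", ts))
```

The mapping is deterministic, so a reader can recompute the key from the same identity and timestamp without any lookup table.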

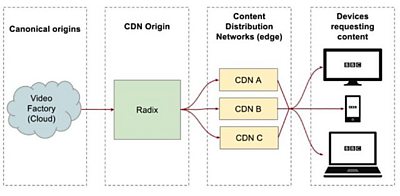

Many other object storage solutions are available from various public cloud providers, including some optimised for video assets. One relatively new addition to the AWS product lineup is intended to be an origin server which stores encoded live and on-demand segments for distribution through CDNs, much like the BBC's own. We've done some preliminary testing and found it to offer better end-to-end latencies than S3, although the maximum object size of 10MB is a little restrictive when working with uncompressed video.

Our Computing & Networks at Scale team are also working with an object store, Ceph, which powers the storage for their on-premise cloud.

We chose AWS and S3 as our initial focus because they are widely used elsewhere in the BBC (such as the time-addressable media store that powers iPlayer), and because how we use the object store is more important than the object store itself. Going forward we'll also be targeting R&D's on-premise OpenStack and Ceph implementation, and researching how to optimise Ceph clusters for high-performance broadcast applications.

What Next?

Along with experimenting with the performance of "pure" S3 object storage, we've also been building a more complete prototype of our media object store service. We've been using this to read and write real, uncompressed, video frames to S3 and measuring how it performs.

- Part 3 of this series talks about our experimental store, "Squirrel"

- Read Part 1 of this series.

Automated Production and Media Management section

This project is part of the Automated Production and Media Management section