Voice-controlled technology is gaining traction as a new means and mode of accessing audio, including live broadcasting, catch-up programming or on-demand content. Providing more immediate access is an evident benefit of this recent innovation in consumer audio technology. However, current smart speaker interaction design relies heavily on the user’s memory and mood to direct listening decisions. Listeners simply cannot request what they do not know exists.

We collaborated with Rishi Shukla, a visiting researcher from , to investigate the design of interactive, voice-led content navigation for smart audio devices.

In this post, Rishi introduces the prototype he developed for BBC Research & Development, the Audio Archive Explorer (AAE). It was built to evaluate how effectively audio content could be discovered through voice interaction alone. We also assessed how interaction and connectedness differed between using AAE on a smart speaker and in an emerging audio augmented reality (audio AR) smart headphone experience. Full details of the research project are given in our accompanying report, 'Voice-Led Interactive Exploration of Audio'.

System Design

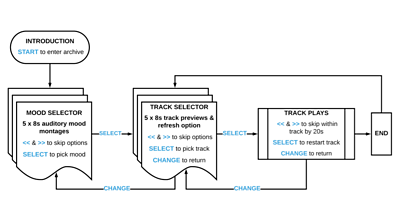

The AAE was designed to enable voice-led exploration of highlights from the BBC’s Glastonbury 2019 coverage. Fifty live recordings were each assigned to one of five mood categories: ‘happy’, ‘sad’, ‘energetic’, ‘mellow’ and ‘dark’. All navigation was achieved purely through speech and audio playback, using just two menus of five cycling options within the structure shown in the diagram.
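The two-menu structure rests on one simple mechanism: a short list of options that the listener steps through by voice, wrapping around at the end. A minimal Python sketch of such a cycling menu follows. It assumes hypothetical "next", "back" and "repeat" commands (the post does not list the AAE's actual voice vocabulary) and shows only the mood menu, because the contents of the second menu are not specified here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CyclingMenu:
    """A menu whose options wrap around: moving past the last option returns to the first."""
    options: List[str]
    index: int = 0

    def current(self) -> str:
        # In the AAE, arriving at an option plays an audio preview (e.g. an
        # eight-second mood montage); this sketch just returns the label.
        return self.options[self.index]

    def next(self) -> str:
        self.index = (self.index + 1) % len(self.options)
        return self.current()

    def previous(self) -> str:
        # Python's % keeps the result non-negative, so index 0 wraps to the last option.
        self.index = (self.index - 1) % len(self.options)
        return self.current()


def handle_command(menu: CyclingMenu, command: str) -> str:
    """Map a recognised utterance to a menu action (hypothetical vocabulary, not the AAE's)."""
    actions = {"next": menu.next, "back": menu.previous, "repeat": menu.current}
    if command not in actions:
        raise ValueError(f"unrecognised command: {command!r}")
    return actions[command]()


moods = CyclingMenu(["happy", "sad", "energetic", "mellow", "dark"])
for spoken in ["next", "next", "back", "back", "back"]:
    print(handle_command(moods, spoken))
```

Because the index wraps with modular arithmetic, saying "back" at the first mood lands on the last one, so a listener navigating by ear never reaches a dead end.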

The design placed particular emphasis on discovery through listening. Letting listeners make choices based on what they hear, rather than what they see, differs significantly from the way digital audio content is typically offered. Most music, radio and podcast navigation interfaces are visually focused, which is at odds with the fundamentally aural nature of the material. We encouraged listeners to explore and select pieces based on eight-second mood montages and track previews, rather than on listed artist and title information. Spoken metadata was presented only after a selection had been made and during playback, much like a radio presenter's announcements.

Experience Design

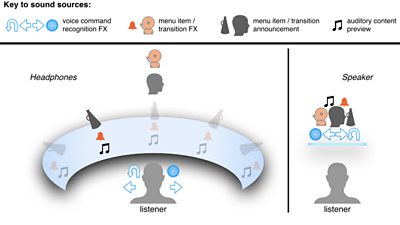

The presentation of content in the two simulations of AAE, headphone and speaker, was identical except for the apparent spatial locations from which individual sounds emanated during navigation. Using an audio signal processing technique known as binaural synthesis, the smart headphone version placed sound elements at different positions around the listener. In contrast, the smart speaker version necessarily used standard mono playback for all audio components, without any spatial separation, as illustrated in the diagram.
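Full binaural synthesis normally filters each sound with head-related transfer functions (HRTFs), which the post does not detail. As a much simpler illustration of the underlying idea, the sketch below spreads five sound elements (for example, five menu options) across a frontal arc and lateralises a mono signal using only the interaural time difference (ITD) from Woodworth's spherical-head approximation, ITD ≈ (a/c)(θ + sin θ). The head radius, sample rate, arc and element count are all assumptions, and this is not presented as the AAE's implementation.

```python
import math
from typing import List, Tuple

# Assumed values for illustration only.
HEAD_RADIUS_M = 0.0875       # typical adult head radius
SPEED_OF_SOUND_M_S = 343.0   # speed of sound in air at roughly 20 °C
SAMPLE_RATE_HZ = 48_000


def frontal_azimuths(n: int, spread_deg: float = 180.0) -> List[float]:
    """Spread n sources evenly across a frontal arc (degrees; negative = left, positive = right)."""
    if n == 1:
        return [0.0]
    step = spread_deg / (n - 1)
    return [-spread_deg / 2 + i * step for i in range(n)]


def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds for a rigid spherical head.

    Woodworth's approximation ITD = (a / c) * (theta + sin(theta)), valid for
    azimuths between -90 and +90 degrees. Positive values mean the sound
    reaches the right ear first.
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def lateralise(mono: List[float], azimuth_deg: float,
               sample_rate: int = SAMPLE_RATE_HZ) -> Tuple[List[float], List[float]]:
    """Return (left, right) channels, delaying the ear farther from the source by the ITD.

    Timing cues only: real binaural synthesis would also apply level and
    spectral cues via HRTF filtering.
    """
    delay = round(abs(woodworth_itd(azimuth_deg)) * sample_rate)
    near = mono + [0.0] * delay    # ear closer to the source hears it immediately
    far = [0.0] * delay + mono     # ear farther away hears it `delay` samples later
    if azimuth_deg > 0:            # source on the right: the left ear is the far ear
        return far, near
    return near, far


for az in frontal_azimuths(5):
    print(f"{az:+6.1f} deg -> ITD {woodworth_itd(az) * 1e6:+5.0f} microseconds")
```

With these assumed values the largest ITD, at 90 degrees, is about 0.66 ms. Timing cues alone cannot distinguish front from back, which is one reason the sketch keeps every element in the frontal arc.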

Previous research in remote conferencing has shown that spatial audio presentation of multi-talker conversations benefits memory, comprehension, and the assignment of meaning or identity. We wanted to explore whether similar benefits could be gained from the spatial presentation of mixed audio content that does not involve human-to-human interaction.

Evaluation

Twenty-two participants, selected to achieve a balance of profiles, took part in the evaluation of AAE. Studies were held in a user experience testing lab at the BBC Broadcast Centre in London. Ten participants used the headphone version and twelve used the speaker version to complete the following task:

You have 15 minutes to explore the archive as far as you can and find six new tracks that you like. Use the pen and paper provided to make your list as you go. Make a note of the track names and artists that you choose and anything particular you liked about each one.

Participants were given a general onboarding and high-level orientation to the prototype before starting, but were purposely not told how to operate the system. Each was then left alone to complete the task independently. Data gathered during the testing sessions included real-time logs of participants' voice interactions; self-reported qualitative usability ratings; a hand-drawn mental model of the system each participant experienced; and video recordings of pre- and post-task interviews. The accompanying report presents a detailed analysis of the interaction data, usability ratings and mental model drawings.

Findings and Next Steps

The report presents evidence suggesting that the AAE prototype was conducive to audio content discovery, and that participants could navigate it confidently and fluently without detailed instruction beforehand. We found no evidence that binaural presentation made engagement with the system more effective on a practical level, although the report highlights aspects of the study design that may have made such an effect less likely to emerge. However, participants’ mental model drawings do suggest that headphone users had a noticeably different interactive experience of the content.

A detailed examination of the pre- and post-task interviews, currently underway, will give further insight into the overall usability of AAE and into how the smart headphone and speaker versions differed. Three priorities are identified for future exploration of the AAE approach in general: testing with a longer task; using gestural rather than voice input; and using alternative content and browsing contexts.

The background motivation, conception, engineering, implementation, research study design, data analysis and conclusions are all presented fully in the 'Voice-Led Interactive Exploration of Audio' report.


- BBC R&D - Voice-Led Interactive Exploration of Audio

- BBC R&D - Top Tips for Designing Voice Applications

- BBC R&D - Emotional Machines: How Do People Feel About Using Smart Speakers and Voice-Controlled Devices?

- BBC R&D - Talking with Machines

- BBC R&D - User Testing The Inspection Chamber

- BBC R&D - Prototyping for Voice: Design Considerations

- BBC R&D - Singing with Machines

- BBC R&D - Better Radio Experiences


Internet Research and Future Services section

The Internet Research and Future Services section is an interdisciplinary team of researchers, technologists, designers, and data scientists who carry out original research to solve problems for the BBC. Our work focuses on where audience needs and public service values intersect with digital media and machine learning. We develop research insights, prototypes and systems using experimental approaches and emerging technologies.