One of the aims of the BBC Research & Development Audio Team is to enable the BBC to deliver great listening experiences to the audience, regardless of the audio devices that they’re using.

We’re good at creating immersive listening experiences using spatial audio on loudspeaker systems with a high channel count (for example, 5.1 surround sound), or on headphones with binaural audio. But only a small proportion of the BBC audience have surround sound systems, and headphones are not well suited to shared experiences at home.

As one possible solution for this problem, we’ve been investigating the concept of device orchestration - that’s the idea of using any available devices that can play audio as part of a system for immersive and interactive audio playback. We’ve developed a framework for the key components of an orchestrated system.

- Pairing: adding devices into the system. We use a short numerical code that can be embedded into a shared link or a QR code.

- Synchronisation: making sure that the devices can play back audio in sync. We use the that was developed in the .

- Audio routing and playback: deciding what audio to play from each device and actually rendering it on the devices. Object-based audio means that we can keep different elements of the audio scene separate from each other and more flexibly assign them to devices.
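
To make the first two components more concrete, here is a minimal sketch in Python. It is not the code used in our framework: the function names, the `join` query parameter, the six-digit code length, and the example URL are all assumptions made for illustration. It shows a short numerical pairing code being embedded in a shareable link (which could equally be rendered as a QR code), and a playback position being worked out from a shared clock so that devices with different local clocks agree on where they are in the content.

```python
import secrets
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse


def new_pairing_code(digits: int = 6) -> str:
    """Return a random numerical code, zero-padded to a fixed length."""
    return f"{secrets.randbelow(10 ** digits):0{digits}d}"


def join_link(base_url: str, code: str) -> str:
    """Embed the pairing code in a link (hypothetical 'join' parameter)."""
    return f"{base_url}?{urlencode({'join': code})}"


def code_from_link(link: str) -> Optional[str]:
    """Recover the pairing code from a join link, or None if it is absent."""
    values = parse_qs(urlparse(link).query).get("join")
    return values[0] if values else None


def playback_position(local_now: float, clock_offset: float,
                      shared_start: float) -> float:
    """Seconds into the content that a device should be playing.

    clock_offset is the device's estimate of (shared clock - local clock);
    shared_start is when playback began, measured on the shared clock.
    """
    shared_now = local_now + clock_offset
    return max(0.0, shared_now - shared_start)


if __name__ == "__main__":
    code = new_pairing_code()
    link = join_link("https://example.org/experience", code)
    print(link, code_from_link(link))
```

In this sketch, two devices whose local clocks disagree still compute the same playback position once each has applied its own offset to the shared clock. Estimating that offset is the job of the synchronisation service and is not shown here.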

We’ve recently launched a prototyping production tool called Audio Orchestrator. It enables quick and easy development of orchestrated audio experiences based on the framework described above. The tool is available through BBC MakerBox, which is both a platform for creators to access tools to enable exploration of new technologies and a community for discussion and sharing of ideas.

In this blog post, I’ll briefly summarise our work leading up to the development of the prototyping tool, and introduce Audio Orchestrator and some of its features.

How did we get here?

In late 2018, we released a trial production, an audio drama called The Vostok-K Incident, as part of the . It worked as a standard stereo audio drama but also used the orchestration framework to allow listeners to connect extra devices and report where they were positioned. This allowed us to deliver immersive sound effects and additional storyline content that adapted to the number of devices available.

Producing The Vostok-K Incident was much more time consuming than a standard audio drama and required a bespoke toolchain. We evaluated listeners’ responses to the trial, finding that there were some significant benefits to this type of audio reproduction and also some factors to consider for future productions. One such suggestion was exploring different genres of content, not just audio drama. To do this, we needed to be able to create content much more quickly—and also enable other people to create experiences.

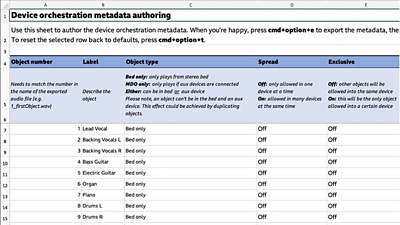

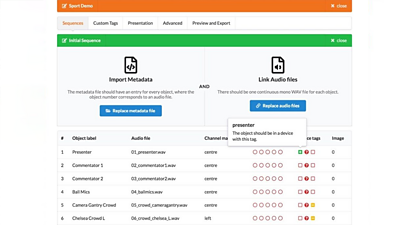

We planned a set of workshops with production teams to explore how they might want to use device orchestration in their work. We started building a simple prototyping tool so that we could quickly and easily build prototypes during those workshops. The first iteration of the prototyping tool required the user to write some metadata describing the orchestrated experience in an Excel spreadsheet and import that, alongside some audio files, into the tool. Users could then preview the experience they’d built in a basic template application.

Above: The metadata authoring spreadsheet used in the first iteration of the prototyping tool. Below: An early version of the prototyping tool user interface.

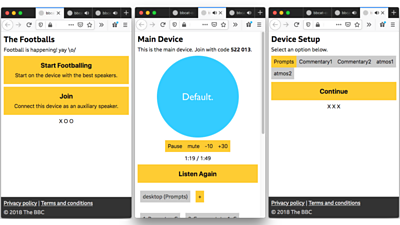

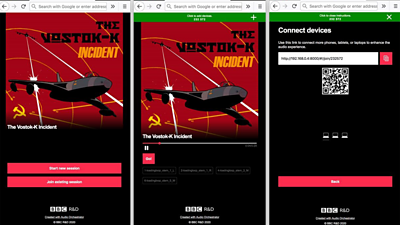

Above: An early version of the template application. On the left: starting a new session or joining an existing one. In the centre: the playing page. On the right: user controls on a connected device.

The first few workshops were enough to convince us of the value of developing the prototyping tool further so that it could be used to produce more, better orchestrated experiences.

Building Audio Orchestrator

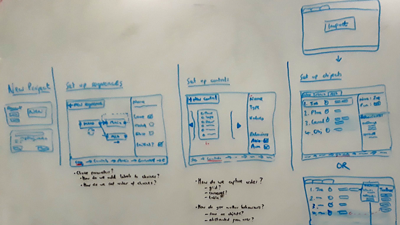

Based on what we learned from using the tool in workshops, we went back to the drawing board to make significant improvements to the framework and the prototyping tool.

- We reworked some of the underlying orchestration framework, taking on board some of the things that the workshop participants had wanted to achieve and making the framework much more flexible and easier to use.

- We redesigned the prototyping tool—integrating the metadata editing stage, adding new features, and designing a workflow that hit a good trade-off between flexibility and ease of use.

- We redesigned the prototype application template so that experiences looked better with minimal extra effort needed from the producer.

A whiteboard session to redesign the Audio Orchestrator workflow.

The final Audio Orchestrator workflow has three broad steps.

- Create your audio files. This happens outside of Audio Orchestrator—you can create audio files using whatever software you would normally use, but there are some requirements for how the files are formatted before they're added to the tool.

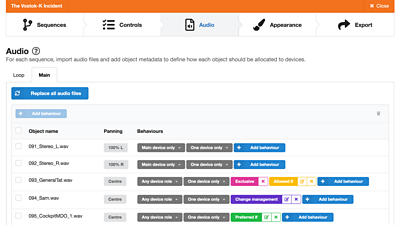

- Build the experience in Audio Orchestrator. When you’ve created audio files, they’re imported into the production tool, which is used to turn them into an orchestrated experience. You can set up a path through different sections of content, add controls for the listener so that they can interact with the experience, and add behaviours to your audio objects that determine how they’re allocated to the connected devices. You can also customise what the experience looks like—for example, by adding a cover image.

- Export your experience as a prototype application. The output of Audio Orchestrator is a prototype web application for your orchestrated experience. Once you’ve built your experience, you can either preview it locally in the browser, or export it to share with others.
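
As a rough illustration of what "behaviours that determine how audio objects are allocated to the connected devices" can mean, here is a minimal Python sketch. It is not how Audio Orchestrator is implemented: the behaviour labels (`main_only`, `aux_preferred`, `everywhere`), the class names, and the round-robin rule are all hypothetical, chosen only to show how an allocation might adapt as devices join.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Device:
    device_id: str
    is_main: bool  # the device that started the session


@dataclass
class AudioObject:
    name: str
    behaviour: str  # hypothetical: "main_only", "aux_preferred", "everywhere"


def allocate(objects: List[AudioObject],
             devices: List[Device]) -> Dict[str, List[str]]:
    """Map each device ID to the names of the objects it should play."""
    main = [d for d in devices if d.is_main]
    aux = [d for d in devices if not d.is_main]
    allocation: Dict[str, List[str]] = {d.device_id: [] for d in devices}
    next_aux = 0  # round-robin index over the connected aux devices

    for obj in objects:
        if obj.behaviour == "everywhere":
            targets = devices
        elif obj.behaviour == "aux_preferred" and aux:
            targets = [aux[next_aux % len(aux)]]
            next_aux += 1
        else:
            # "main_only", or "aux_preferred" with no aux devices connected
            targets = main
        for device in targets:
            allocation[device.device_id].append(obj.name)
    return allocation


if __name__ == "__main__":
    scene = [
        AudioObject("dialogue", "main_only"),
        AudioObject("rain", "everywhere"),
        AudioObject("footsteps", "aux_preferred"),
    ]
    print(allocate(scene, [Device("laptop", True)]))
    print(allocate(scene, [Device("laptop", True), Device("phone", False)]))
```

With only the main device connected, every object falls back to it, so the experience still works as an ordinary single-device piece. When a second device joins, the `aux_preferred` object moves over to it, which is the kind of adaptation that keeping the audio objects separate makes possible.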

Above: Adding behaviours to audio objects in Audio Orchestrator. Below: The prototype application. Left: start a new session or join an existing one. Centre: the playing page. Right: joining instructions, also showing how many devices are connected.

Growing a community of practice

I’ve given a very brief overview of how Audio Orchestrator works, but there’s .

Our main reason for making Audio Orchestrator available to the community is to investigate the creative opportunities of the device orchestration technology. The tool is available free for non-commercial use—you can request access from the MakerBox webpage. provides a meeting place for people working on orchestrated audio to share ideas, ask for help, and provide feedback on the process. We’ll also be using this to share example projects and our thoughts, as well as organising workshops and events.

We’re really excited to see what new experiences everyone creates!

- BBC MakerBox - Audio Orchestrator

- BBC R&D - Vostok K Incident - Immersive Spatial Sound Using Personal Audio Devices

- BBC R&D - Vostok-K Incident: Immersive Audio Drama on Personal Devices

- BBC R&D - Evaluation of an immersive audio experience

- BBC R&D - Exploring audio device orchestration with audio professionals

- BBC R&D - Framework for web delivery of immersive audio experiences using device orchestration

- BBC R&D - The Mermaid's Tears

- BBC R&D - Talking with Machines

- BBC R&D - Responsive Radio
