We are exploring how high-bandwidth, low-latency mobile networks such as 5G can enable high-quality immersive experiences on mobile devices. Working with partners in the West of England Combined Authority’s 5G Smart Tourism project, we are developing and trialling a VR/AR experience at the Roman Baths in Bath to test whether emerging network technology can support these kinds of experiences, and whether end users find them useful.

Project from 2018 - 2019

New Networks – New Possibilities

Modern mobile phones offer great possibilities for providing rich AR and VR content, although this can often require a large quantity of data, particularly where 360-degree videos or highly-detailed animations are involved. This often limits what is possible, particularly for users who are on a mobile network. In this project we are investigating whether 5G technology can enable such visually-rich experiences, and what users think about them.

We have been working with partners in the 5G Smart Tourism project to focus on experiences that are designed to enhance a user’s visit to a particular place. We are developing and trialling an application that uses the phone as a window to show the user an alternative view of the place in which they are standing. This has obvious applications as a ‘window back in time’ for showing the history of an area, but could also be used to visualise other aspects, perhaps through graphical overlays on a video captured in the present day to show information about the scene.

AR vs VR

This approach has much in common with augmented reality, although showing a fully-rendered (or 360 video) virtual reality view rather than adding overlays to the live video from the user’s phone has several advantages:

- There is no need for highly-accurate tracking of the device. The tracking only needs to be good enough to let the user understand the relationship between the real world around them and the image on their screen. In many cases the user is likely to be standing still and looking around them, so real-time positional tracking is not essential.

- There is no need for the user to have a good image from their phone camera. This means that they can use the app when they don’t have a good view of the scene (e.g. when people are standing in front of them or when the scene is poorly-lit), and they can hold the device at a position that is comfortable for them, rather than having to frame an appropriate image of the scene.

- BBC R&D - 360 Video and Virtual Reality

- BBC R&D - An Introduction to BBC Reality Labs

- BBC R&D - Research White Paper: Mobile Augmented Reality using 5G

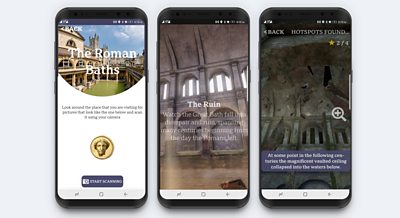

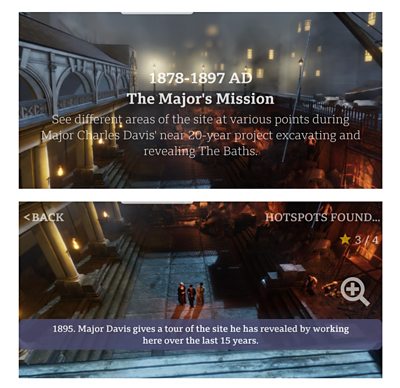

The Roman Baths Trial

In the 5G Smart Tourism project we developed and trialled an application at the Roman Baths in Bath, to visualise the Baths at times before and after the Roman period, complementing the information currently provided to visitors about the Roman period. The app tells the story of three periods: the mythical discovery of the hot springs by King Bladud, the Baths falling into disrepair when the Romans left, and the renovation in Victorian times. Each period was recreated by an animation studio as an animated 3D scene that plays for several minutes, with ‘hotspots’ that the user can discover to display interesting information about various parts. Each scene was designed to be viewed from a particular location in the Baths, and was triggered when the user pointed the phone camera at a picture mounted at the appropriate location.

- BBC R&D - 5G Trials - Streaming AR and VR Experiences on Mobile

- BBC R&D - 5G Smart Tourism Trial at the Roman Baths

- BBC R&D - New Content Experiences over 5G Fixed Wireless Access

Technical Details of the First Phase (Streaming 360 Video)

We initially investigated delivering the scenes as pre-rendered 360-degree video (4k x 2k resolution). We encoded the scenes using H.264 at several bit rates in the range 5-40 Mbit/s to investigate the trade-off between picture quality and loading/buffering time, and chose a rate of 10 Mbit/s. Conventional mobile networks (and many Wi-Fi installations) would struggle with the higher rates, particularly when there are around 20 simultaneous users in the same area, which is a realistic number for a site such as the Baths.
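To make the scale of the problem concrete, the following is a minimal sketch of the arithmetic behind the trade-off described above. The list of bit rates samples the 5-40 Mbit/s range (the intermediate value is illustrative), and the start-up buffer length and per-user link capacity are hypothetical figures chosen for illustration, not measurements from the trial.

```python
# Rough arithmetic for streaming pre-rendered 360 video to many users.
# Only the 5-40 Mbit/s range, the chosen 10 Mbit/s rate and the figure of
# 20 simultaneous users come from the text; everything else is assumed.

BIT_RATES_MBPS = [5, 10, 20, 40]  # sample points in the tested range
USERS = 20                        # simultaneous users quoted for the Baths


def aggregate_demand_mbps(bit_rate_mbps: float, users: int = USERS) -> float:
    """Sustained throughput needed if every user streams at the same time."""
    return bit_rate_mbps * users


def startup_delay_s(bit_rate_mbps: float, buffer_s: float,
                    per_user_capacity_mbps: float) -> float:
    """Time to fetch `buffer_s` seconds of video before playback can begin."""
    return bit_rate_mbps * buffer_s / per_user_capacity_mbps


if __name__ == "__main__":
    BUFFER_S = 2.0             # hypothetical start-up buffer, in seconds
    PER_USER_CAPACITY = 20.0   # hypothetical per-user throughput, in Mbit/s
    for rate in BIT_RATES_MBPS:
        total = aggregate_demand_mbps(rate)
        delay = startup_delay_s(rate, BUFFER_S, PER_USER_CAPACITY)
        print(f"{rate:>2} Mbit/s: {total:>4.0f} Mbit/s aggregate, "
              f"{delay:.1f} s to start")
```

Under these assumptions, aggregate demand grows linearly with both the bit rate and the number of users, so a modest per-stream rate still adds up to a substantial load when 20 people stream at once.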

Our first set of trials was run at the Baths in December 2018 during closed evening sessions. The video was hosted on ‘edge’ servers in the 5G core network, set up by the . The core network was linked to the Baths using a 60 GHz mesh network provided by a project partner. The final link to the handsets used Wi-Fi, as 5G-enabled consumer handsets were not yet available. We logged technical data such as the loading/buffering times, as well as data about how the users explored the scenes, and gathered their opinions on the experience.

Second-Phase Trials (Remote Rendering)

We ran a second set of trials in March 2019, when we investigated an alternative approach for delivering VR to mobile handsets, using remote rendering technology to render the scene in an edge server in the 5G network. We investigated the use of scene rendering and in combination with the . The low-latency capabilities of 5G allowed us to stream device orientation data to the server and send back the rendered video with no apparent delay (we were aiming for less than about 200 ms for the end-to-end process from the user moving their phone to seeing the image move on the display).

This approach offers several potential advantages over alternative approaches:

- Compared to streaming pre-rendered 360 video, this approach offers the possibility of additional user interaction (such as more accurately matching the viewpoint in the virtual scene to where they are actually standing), as well as lower network bandwidth.

- Compared to rendering the scene locally on the user’s device, this approach makes the application much more lightweight to download (making it more likely that a user will install it), and battery life will also be improved as the compute-intensive rendering happens remotely. It also ensures that all users (even those with older devices) see the same quality of the scene, as this is not dependent on the graphics rendering capability of their phone.

However, it requires GPU-equipped compute nodes to be available in the network.
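One way to reason about the end-to-end target of about 200 ms for remote rendering is to break the loop into stages and sum them. The sketch below does this; the stage names and every per-stage figure are hypothetical placeholders rather than values measured in the trials, and only the 200 ms target comes from the text.

```python
# Latency budget for a remote-rendering loop: the phone sends its orientation
# to an edge server, which renders, encodes and streams back a video frame.
# All per-stage values are assumed for illustration, not trial measurements.

TARGET_MS = 200.0  # end-to-end target quoted in the text

budget_ms = {
    "sensor_sample": 10.0,  # reading device orientation
    "uplink": 15.0,         # orientation data to the edge server
    "render": 17.0,         # GPU rendering of the scene
    "encode": 20.0,         # video encoding on the server
    "downlink": 15.0,       # encoded frame back to the handset
    "decode": 20.0,         # video decoding on the handset
    "display": 17.0,        # waiting for the next screen refresh
}


def end_to_end_ms(stages: dict) -> float:
    """Total latency from moving the phone to seeing the image move."""
    return sum(stages.values())


def headroom_ms(stages: dict, target_ms: float = TARGET_MS) -> float:
    """Remaining budget; a negative value means the target is missed."""
    return target_ms - end_to_end_ms(stages)


if __name__ == "__main__":
    total = end_to_end_ms(budget_ms)
    print(f"total {total:.0f} ms, headroom {headroom_ms(budget_ms):.0f} ms")
```

Laying the budget out this way shows which stages the network influences (the uplink and downlink entries) and which depend on the server and handset, which is useful when deciding where extra latency can be tolerated.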

We hope that the results of our work will help inform the BBC about how best to exploit the capabilities of 5G networks for delivering new kinds of user experience, as well as improving our general understanding of how to use VR and AR technology to educate and entertain BBC audiences.

Further details of the trials, including an analysis of the user feedback, can be found in BBC R&D White Paper 348.

Related links

- BBC R&D - Research White Paper: Mobile Augmented Reality using 5G

- BBC R&D - All of our articles on 4G and 5G including:

- BBC R&D - Broadcasting Over 5G - Delivering Live Radio to Orkney

- BBC R&D - 4G & 5G Broadcast

- BBC R&D - TV Over Mobile Networks - BBC R&D at the 3GPP

- BBC R&D - 5G-Xcast

- BBC R&D - 4G Broadcast for the Commonwealth Games 2014

- BBC R&D - 4G Broadcast technology trial at Wembley 2015 FA Cup Final

- BBC R&D - 4G Broadcast: Can LTE eMBMS Help Address the Demand for Mobile Video?

- BBC R&D - 5G Trials - Streaming AR and VR Experiences on Mobile

- BBC R&D - 5G Smart Tourism Trial at the Roman Baths

- BBC R&D - Unlocking the Potential of 5G for Content Production

- BBC R&D - Building our own 5G Broadcast Modem

- BBC R&D - Broadcast Wi-Fi

Project Partners

Animation studio

The Roman Baths is one of the most popular tourist attractions in the UK. It is run by the Heritage Services section of Bath & North East Somerset Council.

WECA is made up of three of the local authorities in the region: Bath & North East Somerset, Bristol and South Gloucestershire.

Immersive and Interactive Content section

The IIC section is a group of around 25 researchers, investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.