Overview

In our article 'How research is different for UX writing', we looked at how different user research methods can be adapted when the aim is to gather insight for copy and content design. We went through some of the most common methods, like click tests, MVT tests and quick exposure tests.

Following on from that, we can also look at how more advanced methods (the less common, the niche, and the resource-heavy) could be approached slightly differently to deliver clearer insights for UX writing.

Field studies

What:

Qualitative (and sometimes quantitative) research of varying types that takes place when the product is being used naturally, rather than for the express purpose of research.

Things you might learn:

- in situ mindset

- user attitudes

- pain points

Special considerations:

Field studies tend to be small. That means they prove more useful towards the start of content design rather than at the end of the process.

To make sure that any issues calling for a copy solution are trends rather than one-offs, it's worth corroborating the results with larger-scale studies. Even then, any added copy will need to be balanced against the 'less is more' principle.

Clickstream analysis

What:

Quantitative research, largely from analytics software, that looks at the sequence of live screens users pass through to complete a task.

Things you might learn:

- problematic user journeys

- opportunities for better content design

- effect of tone on end-to-end user journeys

Special considerations:

Clickstream analysis will tell you what a user has clicked on, but not their understanding of it. This means it should be used in conjunction with other methods that show comprehension.

Because it looks at the full journey rather than just one screen, it can be useful when comparing two sets of copy that are similar in instruction but different in tone, to understand which converts more successfully.

For more robust data, also look at the time spent on each journey combination.
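As a rough sketch of that comparison, the snippet below works out a conversion rate and a typical completion time for each tone. The journey records, the variant names and the `summarise` helper are all made up for illustration. Real data would come from an export of your analytics tool.

```python
from statistics import median

# Hypothetical journey records: (copy_variant, completed_task, seconds_taken)
journeys = [
    ("friendly", True, 42.0),
    ("friendly", True, 38.5),
    ("friendly", False, 75.0),
    ("formal", True, 51.0),
    ("formal", False, 90.0),
    ("formal", False, 64.0),
]

def summarise(records):
    """Return {variant: (conversion_rate, median_seconds_of_completed_journeys)}."""
    by_variant = {}
    for variant, completed, seconds in records:
        by_variant.setdefault(variant, []).append((completed, seconds))

    summary = {}
    for variant, rows in by_variant.items():
        completed_times = [seconds for done, seconds in rows if done]
        rate = len(completed_times) / len(rows)
        # No completed journeys means there is no typical completion time.
        typical = median(completed_times) if completed_times else None
        summary[variant] = (rate, typical)
    return summary

summary = summarise(journeys)
for variant, (rate, typical) in summary.items():
    print(f"{variant}: {rate:.0%} converted, median {typical}s")
```

With a sample this small the numbers mean nothing. The point is only that conversion and time can sit side by side for each variant.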

(Modified) Cloze test

What:

Qualitative or quantitative test that hides every xth word from view, so that participants have to guess the word themselves. How well they guess shows their level of comprehension.

Things you might learn:

- terminology

- comprehension

Special considerations:

Cloze tests are traditionally used for longer-form copy, but I've repurposed the principle for short-form copy and microcopy. Just remove key words to see if users might get the gist when scan-reading.

It's also a useful way to discover natural, everyday language. How participants fill in the missing words will show the most popular and best understood terms. Just be careful that there are no leading words in the test instructions.
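If you want to prepare test copy quickly, both versions can be sketched in a few lines. This is a minimal illustration, not a standard tool: `make_cloze` and `blank_key_words` are hypothetical helper names, and the sample sentences are invented.

```python
import re

def make_cloze(text, every=5, blank="__"):
    """Traditional cloze: blank every `every`-th word, return gapped text and hidden words."""
    words = text.split()
    hidden = []
    for i in range(every - 1, len(words), every):
        # Split the word from any trailing punctuation so the sentence still reads naturally.
        # Words that start with punctuation (such as an opening quote) are left as they are.
        match = re.match(r"^(\w[\w'-]*)(\W*)$", words[i])
        if match:
            hidden.append(match.group(1))
            words[i] = blank + match.group(2)
    return " ".join(words), hidden

def blank_key_words(text, key_words, blank="__"):
    """Modified cloze for microcopy: blank only the chosen key words."""
    if not key_words:
        return text
    pattern = r"\b(" + "|".join(re.escape(word) for word in key_words) + r")\b"
    return re.sub(pattern, blank, text, flags=re.IGNORECASE)

gapped, hidden = make_cloze("The quick brown fox jumps over the lazy dog.", every=3)
print(gapped)   # The quick __ fox jumps __ the lazy __.
print(hidden)   # ['brown', 'over', 'dog']

print(blank_key_words("Tap Save to keep your changes.", ["save"]))
# Tap __ to keep your changes.
```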

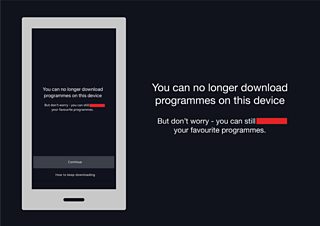

Example:

'You can no longer download programmes on this device. But don't worry - you can still __ your favourite programmes.'

Results:

Watch - 51%

Stream - 15%

View - 5%

Download - 5%

Enjoy - 3%

Access - 3%

See - 2%

Other/n/a - 16%
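A breakdown like this can be tallied from the raw answers with very little code. The sketch below assumes free-text responses in a simple list. The `tally` helper and the sample answers are invented for illustration, and are not the data behind the results above.

```python
from collections import Counter

def tally(responses):
    """Return [(answer, percentage)] sorted by popularity, ignoring case and blank answers."""
    cleaned = [answer.strip().lower() for answer in responses if answer.strip()]
    counts = Counter(cleaned)
    total = len(cleaned)
    return [(answer, round(100 * n / total)) for answer, n in counts.most_common()]

# Hypothetical answers from five participants
sample = ["Watch", "watch ", "stream", "Watch", "view"]
for answer, percentage in tally(sample):
    print(f"{answer} - {percentage}%")
```

In practice you'd probably group near-synonyms and misspellings by hand before counting, which is where an 'Other' bucket comes from.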

Highlighter tests

What:

Quantitative research, often used for long-form copy. A participant reads a passage of text and uses different coloured highlighters to mark the parts that make them feel confident or less confident (or that are clear or unclear, and so on).

Things you might learn:

- clarity

- pain points

- content hierarchy

Special considerations:

How often a section is highlighted will show the areas that are clear, important or that need reworking. But only some of that will be about the way it's written: highlighted sections might reflect the content more than the writing. For example, a sentence about data sharing may be highlighted however it's worded. The impact of wording can be tested by using different copy variations.
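To turn the highlights into something countable, one option is to record them per sentence and work out the share of participants who marked each one. The sketch below assumes a made-up format in which each participant's highlights are a list of (sentence number, label) pairs. The labels 'clear' and 'unclear' and the `highlight_rates` helper are illustrative only.

```python
from collections import defaultdict

# Hypothetical data: one list per participant of (sentence_id, label) pairs
highlights = [
    [(0, "clear"), (2, "unclear")],
    [(0, "clear"), (1, "clear"), (2, "unclear")],
    [(1, "unclear"), (2, "unclear")],
]

def highlight_rates(all_highlights):
    """Return {sentence_id: {"clear": share, "unclear": share}} across participants."""
    participants = len(all_highlights)
    counts = defaultdict(lambda: {"clear": 0, "unclear": 0})
    for participant in all_highlights:
        # set() makes sure each participant counts once per sentence and label.
        for sentence_id, label in set(participant):
            counts[sentence_id][label] += 1
    return {
        sentence_id: {label: n / participants for label, n in labels.items()}
        for sentence_id, labels in counts.items()
    }

rates = highlight_rates(highlights)
for sentence_id in sorted(rates):
    print(sentence_id, rates[sentence_id])
```

Running the same count on each copy variation gives a like-for-like view of whether a change in wording moved the highlights.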

Moderated usability studies

What:

Qualitative research in which a moderator interviews a participant about their experience. Usually the moderator asks a participant to complete a task, and then asks them questions about how they found it. This usually involves asking about the copy.

Things you might learn:

- pain points

- comprehension

- user sentiment/brand perception

Special considerations:

Critically examine the responses before accepting them as insights. In normal use, copy is usually absorbed subconsciously, goes unnoticed, or elicits no strong feelings. The expectation to comment during an interview can therefore artificially heighten a participant's focus on, and feelings towards, the copy.

Participants might also say they want extra copy to aid comprehension. This needs to be taken with a pinch of salt, because the case for clean and simple screens is often stronger on balance, and such screens tend to do better in subsequent studies and in the live product.

Digging into comments can help moderators draw out deeper insights, but it comes with the risk of introducing bias. So take care not to use specific key terms or phrases when writing discussion guides or moderating.

Card sorting and tree testing

What:

Qualitative and/or quantitative research to show how participants understand the labels and categories of the navigation menu. In card sorting, participants group together topics in a way that's logical to them, and assess the category names.

Tree testing works in reverse: participants select categories, and drill down the hierarchy in order to find a required topic.

Things you might learn:

- architecture

- labelling

- terminology

Special considerations:

Repeating card sorts and tree tests with variants of words will show their effect on grouping and architecture.

These tests are best performed when there are only words and no design to guide the participant. That way, the focus stays on the logic of the wording.

Eye tracking

What:

Qualitative research in which physical equipment is used to track where a participant looks on a screen.

Things you might learn:

- propensity to scan-read

- content placement

- comprehension

Special considerations:

Users are often said to read paragraphs in an 'F'-style formation, but eye tracking gives more detail. It can show where the user's eyes rest on the screen, which elements are seen or read, and which are missed. This can help with content design and with optimising the length of copy on a screen.

Used in conjunction with comprehension questions afterwards, it can give an insight into whether copy was misinterpreted, scan-read, or missed.

Bear in mind that current tech can make the experience artificial for participants, as they're conscious of where they're looking. The data can also be noisy, tracking can be difficult with spectacle wearers, and both equipment and recruitment can be expensive.

Search term analysis

What:

Quantitative research in which search terms are analysed to show which terms are being used and how much, whether their popularity is rising or falling, and how one term compares with a similar one. Google Trends is a popular tool for this.

Things you might learn:

- terminology

Special considerations:

This is useful for comparative popularity of terminology, though only when the terms are interchangeable.

In Google Trends, the results are displayed in relative terms only. So it's not possible to see how popular a term is in absolute figures, or within searches about a particular subject matter. For example, you can check the popularity of 'Wireless speaker' compared to 'Bluetooth speaker', but it won't tell you whether either has become more popular among searches for audio devices as a whole.
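A small worked example shows why relative figures hide absolute ones. The sketch below is a simplified stand-in for that kind of indexing, not Google's actual calculation. It assumes you could see raw search counts (which Trends doesn't expose), and the `to_relative_index` helper and all the numbers are invented.

```python
def to_relative_index(series_by_term):
    """Scale every value so that the highest point across all terms becomes 100."""
    peak = max(max(values) for values in series_by_term.values())
    return {
        term: [round(100 * value / peak) for value in values]
        for term, values in series_by_term.items()
    }

# Hypothetical raw search counts over two periods, in a small and a large market
small_market = {"wireless speaker": [10, 20], "bluetooth speaker": [20, 40]}
large_market = {"wireless speaker": [1000, 2000], "bluetooth speaker": [2000, 4000]}

print(to_relative_index(small_market))
print(to_relative_index(large_market))
```

Both markets produce identical indexed figures, even though one has a hundred times the search volume. The comparison between the two terms survives, but the overall size of interest doesn't.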

Illustrations by Poorume Yoo.